The Greatest Story Ever Copied. Part VII: Consciousness and the Grand Simulation?

In Part VI, we engaged replicators and altruism, relativity, and convergence.

15. Consciousness, Propagons and The Importance of Observers in the Grand Simulation

One last grand foray: Let’s explore digital replication and consciousness.

Do we live in an equivalent of The Matrix – a simulated reality?

A couple of decades ago, Nick Bostrom offered an equation that led many to conclude we may be.[1] If you find the idea that you are a simulation distressing, let me offer some underexplored gaps in that story that may relieve you.

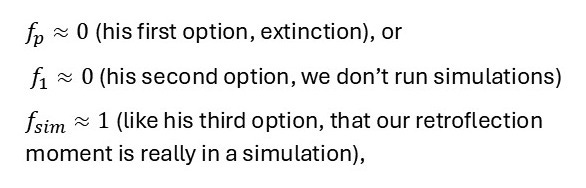

Ultimately, his equation implies that at least one of the following is true:

The human species is very likely to go extinct before reaching a “posthuman” stage; and/or

Any posthuman civilization is extremely unlikely to run a significant number of simulations of their evolutionary history (or variations thereof); and/or

We are almost certainly living in a computer simulation

Obviously, the first is distressing, so we’d like to discount it. The second, he implies, seems unlikely. Accordingly, we are left to conclude the third.

Although his math is cute and accessible, I think it misses an important trio of concepts: the ratios of modeled to real entities, of modeled time to lived time, and of the degree of retroflection in simulated vs. real environments. He asks the likelihood that our existence is a simulation. I ask, instead, the likelihood that we are in a simulation at the moments when we are pondering the question. There’s a difference due to the number of observers, the lifespan of observers, and their propensity to retroflect.

To understand that gap, let’s recreate his logic, first.

Bostrom suggests that posthuman (or postmodern) civilizations will have vast computing power, and should be capable of simulating entities capable of experiencing consciousness. Then, he jumps into relatively simple math (if you hate this math, you can skip down to the section marked *** below without missing much):

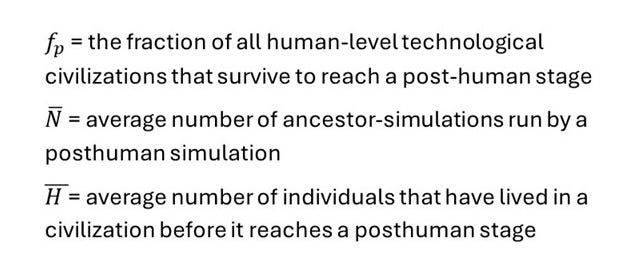

Definitions:

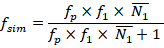

Then, the actual fraction of all observers with human-like experiences that live in simulations (fsim) is just the number of simulated human-like experiences divided by that number plus the number of real human experiences.

He then restates terms: f1 is the fraction of posthuman civilizations that are interested in running ancestor-simulations, and N1 is the average number of ancestor-simulations run by such interested civilizations. N is then just their product.

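Then, with that product substituted for N, the fraction becomes:

$$f_{sim} = \frac{f_p \, f_1 \, N_1}{f_p \, f_1 \, N_1 + 1}$$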

He observes that because posthuman civilizations must have extraordinarily large computing power, N1 must be extremely large.

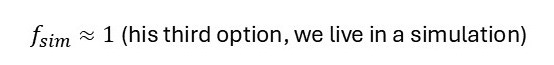

So that’s a fraction with a number in the numerator and the same number plus 1 in the denominator. That has straightforward implications: if the number in the numerator is large, then the fraction is almost 1; if the number in the numerator is almost zero, then the fraction is almost zero. Thus, his conclusions: one or more of three things must be true.

With a big N1, the fraction is almost 1 unless something cancels out the large N1.

However, something could cancel out the big N1 and make fsim close to 0. To do that, one of the other two terms in the numerator (fp or f1) has to be close to zero.
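For readers who prefer to poke at numbers, here is a minimal Python sketch of that behavior. It assumes the fraction has the shape described above, fp × f1 × N1 divided by the same quantity plus 1. The function name and every input value are placeholders of mine, not figures from Bostrom's paper.

```python
def f_sim(f_p: float, f_1: float, n_1: float) -> float:
    """Return x / (x + 1), where x = f_p * f_1 * n_1."""
    x = f_p * f_1 * n_1
    return x / (x + 1.0)


# Placeholder inputs, chosen only to show the three regimes.
N1_LARGE = 1e9

cases = {
    "survive, interested, many sims": f_sim(0.5, 0.5, N1_LARGE),
    "near-certain extinction (tiny f_p)": f_sim(1e-12, 0.5, N1_LARGE),
    "no interest in simulating (tiny f_1)": f_sim(0.5, 1e-12, N1_LARGE),
}

for label, value in cases.items():
    print(f"{label}: f_sim = {value:.6f}")
```

The first case lands very close to 1. The other two land very close to 0, because a tiny fp or f1 is the only thing in the numerator that can offset a huge N1.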

Restating something like his math in words: to his thinking, if we’re not going extinct and we can run simulations, then civilizations can run many simulations of conscious individuals. If so, simulated conscious individuals vastly outnumber non-simulated ones. Therefore, any given individual is almost certainly a simulation.

This math and logic were sufficiently tight to cause anguish to many. Maybe not existential despair; it was, after all, just a little math “trick.” Nonetheless, the revulsion at the concept of living in a simulation was visceral and profound, and thus the prospect was concerning.

***

Although it’s elegant, I think it sweeps a trio of critical details under the rug. It fails to highlight the differences between simulations and reality in (1) duration, (2) # of conscious entities, (3) # of moments retroflectively engaging the question of reality/simulation. Restated: what if all simulations are only 1 minute long? What if all simulations only had 4 conscious entities in them? What if simulated consciousnesses never think about simulations?

Practically speaking, the question that interests me isn’t precisely Bostrom’s equation, but something close to it:

“As I take a few moments to contemplate, what are the odds this retroflection moment is in a simulation?”

Not

“What are the odds that an entire system is a reality or a simulation?”

(If you hate math, skip ahead to *****)

More on the distinction in a moment.

Let’s recast Bostrom’s math to highlight the distinction.

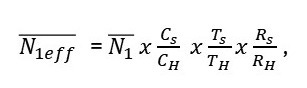

Let’s replace Bostrom’s fsim with f’sim, where the new term instead looks at the fraction of overall retroflection moments that are in simulations. To do that, we also need to change his N1 term (the number of simulations run by interested posthuman civilizations) to a new N1eff reflecting the number of retroflection moments within simulations run by interested posthuman civilizations.

Just like with Bostrom’s equation, 3 natural options still exist for this new equation.

But now we can reconsider the assumption he had built in. He had assumed his N1 (number of simulations run by interested posthuman civilizations) was extremely large. Let’s look at our N1eff, and question whether that is an equivalently large number.

N1eff needs to start with the # of simulated environments (N1), but then modify it to get to the number of retroflection events. We need to bias it up or down based on how many entities are in a simulation vs. reality, how long a life we simulate vs. how long we live, and how retroflective a simulation is compared to how retroflective we are.

Accordingly:

$$N_{1eff} = N_1 \times C \times T \times R$$

where the three modifiers are three ratios comparing simulations to reality in: # of conscious entities (C, simulated vs. human), lifespan of entities (T, simulated vs. human), and # of retroflective moments per unit time (R, simulated vs. human). Although Bostrom comments that his N1 is large, it isn’t clear that our new N1eff is large. Before we tackle that difference, let’s consider some foundational differences between a simulation and reality.
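To see how much the three modifiers can matter, here is a small Python sketch. It rests on two assumptions of mine: that N1eff is simply N1 multiplied by the three ratios, and that f’sim keeps the same "number over number plus 1" shape as Bostrom's fraction. The function names and all numeric values are hypothetical placeholders.

```python
def n1_eff(n_1: float, c: float, t: float, r: float) -> float:
    """Scale the simulation count by the entity (c), lifespan (t),
    and retroflection-rate (r) ratios, each simulated vs. real."""
    return n_1 * c * t * r


def f_sim_prime(f_p: float, f_1: float, n_1: float,
                c: float, t: float, r: float) -> float:
    """Fraction of retroflection moments that sit inside simulations."""
    x = f_p * f_1 * n1_eff(n_1, c, t, r)
    return x / (x + 1.0)


# All three ratios equal to 1: the adjustment changes nothing.
baseline = f_sim_prime(1.0, 1.0, 1e9, 1.0, 1.0, 1.0)

# Hypothetical ratios of 1/1000 each: smaller, shorter, less reflective sims.
shrunk = f_sim_prime(1.0, 1.0, 1e9, 1e-3, 1e-3, 1e-3)

print(f"baseline: {baseline:.6f}")
print(f"shrunk:   {shrunk:.6f}")
```

With these made-up inputs, a billion simulations are offset entirely by three ratios of one in a thousand, and the answer drops from nearly 1 to about one half.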

*****

A simulation requires a reality to host it, not vice versa. The simulation can’t precede the host. Hosting a simulation consumes resources in reality, so simulations are unlikely to last longer than their hosts. This puts a time bound on simulations: the duration of a simulation has to be shorter than that of the reality hosting it.

Further, it is likely to be much, much shorter. Resources are required to run simulations. Accordingly, simulations are likely to be executed with discretion/intent/reason rather than haphazardly. This further reduces the duration of a simulated system, and it also reduces the number of conscious entities you might want to model within any system.

This puts a numeric bound on simulations and on entities within simulations, etc.

[Aside: we have yet to offer even a single “confirmed” conscious simulation (despite my musing above), so the underlying assumption that it can be done at all is still an important jump.]

Finally, it’s hard to fathom what post-human ethics entails, but there may be ethical constraints. Would a post-human civilization feel constrained about making conscious entities? En masse? Or, if it made conscious entities, to make them aware (or unaware) of their simulated nature?[3]

Overall:

Which is more populated, a simulation or reality?

Which has a longer life, a simulated entity or a real one?

Which is going to retroflect more, a simulated entity or a real one?

I don’t know these three comparative ratios. Arguably, they could swing dramatically, biasing the odds either toward or away from simulation. Resource limitations leave me reasonably inclined to think the first two substantially constrain simulations (and thus are much smaller than one).

Regarding the last: Which is going to retroflect more? If a simulation is “coarse,” it may be possible to infer readily from within the simulation that it is a simulation. Likewise, it may be possible for a simulated consciousness to communicate to others within that simulation that they are collectively in a simulation. Correspondingly, it’s possible only one moment of retroflection is necessary across that entire simulation: someone has a Eureka moment, tells everyone else, and retroflection is done. On the other hand, if a simulation is high fidelity, it’s possible that no one could ever see the “seams” of reality to expose it as a simulation. It could be unprovable. How much time will consciousnesses in simulations spend thinking about unprovable subjects? (How much time do you spend thinking about which wins in a battle of an immovable object and an unstoppable force?)

Thus, I suspect the last ratio (relative retroflection) might be an exceedingly small number. Simulated entities may face disincentives to retroflect.

The product of three small numbers (ratios of # of entities, modeled time, and retroflection) vs. one big number (# of models) – could be big or small. I don’t know. And, due to that, I don’t know whether I think the odds are in favor of or against us living in a simulation.
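A quick way to see why the answer could go either way is to work in powers of ten. The sketch below assumes, as my own simplification, that the simulated share of retroflection moments exceeds one half exactly when fp × f1 × N1 × C × T × R exceeds 1, so the sign of the summed exponents decides the verdict. The names and scenario values are hypothetical.

```python
import math


def log10_sim_to_real(n_1: float, c: float, t: float, r: float,
                      f_p: float = 1.0, f_1: float = 1.0) -> float:
    """log10 of (simulated retroflection moments / real ones).
    Positive values favor simulation, negative values favor reality."""
    return sum(math.log10(v) for v in (f_p, f_1, n_1, c, t, r))


def verdict(score: float, tol: float = 1e-9) -> str:
    """Turn the log-ratio into a plain-language verdict."""
    if score > tol:
        return "favors simulation"
    if score < -tol:
        return "favors reality"
    return "toss-up"


# Hypothetical scenarios: (N1, C ratio, T ratio, R ratio).
scenarios = {
    "mildly constrained sims": (1e9, 1e-2, 1e-2, 1e-2),
    "balanced": (1e9, 1e-3, 1e-3, 1e-3),
    "heavily constrained sims": (1e9, 1e-4, 1e-4, 1e-4),
}

for label, (n_1, c, t, r) in scenarios.items():
    score = log10_sim_to_real(n_1, c, t, r)
    print(f"{label}: {verdict(score)}")
```

Shifting each ratio by a single order of magnitude is enough to flip the verdict, which is the sense in which one big number against three small ones "could be big or small."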

Bostrom just gave us three outs (extinct, civilizations don’t model, or we’re in a simulation). I now offer those three plus three more (models are small, models are short, or modeled entities don’t retroflect) plus one more (some combination of those other three), for seven explanations overall.

That’s a somewhat foundationally different story. I think previous readers of Bostrom were left concluding:

“It’s self evident we’re likely in a simulation.”

And then an unfortunate corollary:

“That sucks. I want to take my blue pill and get on with my meager life.”

In contrast, I think my reframing leads to a far less clear situation.

“There are a lot of ways we could conclude we’re in a simulation and a lot of ways we could conclude we’re not.”

Which leads to:

“It’s impossible to figure out. I guess I should move on from my retroflection and get back to living.”

I don’t know about you, but that one puts a smile on my face.

16. Dr. Strange Replicator, or How I Learned to Stop Retroflecting and Love Outsourcing?

[1] Bostrom, N. (2003). Are you living in a computer simulation? Philosophical Quarterly, 53(211), 243–255. https://doi.org/10.1111/1467-9213.00309

[2] https://www.scientificamerican.com/article/stop-asking-if-the-universe-is-a-computer-simulation/

[3] Alternatively, could a post-human civilization feel somehow compelled/interested in specifically making consciousness? If consciousness is a good thing, and I can create it, how am I not compelled to make it? If consciousness is not a good thing, then is the extinction interpretation a requirement?